#6A Regulating Deepfakes - What They Are and Why You Should Care: Part I

While exploring social media, YouTube, or your other favorite cyberspace destination, have you stumbled upon artworks or photographs that come to life like the talking paintings in Harry Potter? Where the Mona Lisa moves in her portrait frame, grinning with teeth and smile lines? Or where an old photograph of Abraham Lincoln greets you with a tip of his iconic black silk top hat, then winks at you through the screen? Or maybe you’ve watched Rogue One: A Star Wars Story and wondered, “How did they find a doppelganger of young Carrie Fisher after she passed away?” The truth is, my friend, you have encountered deepfake technology.

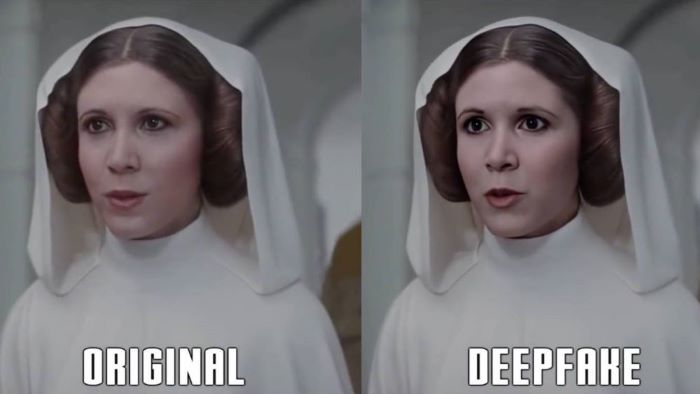

In Rogue One: A Star Wars Story, the production team used CGI to recreate the face of young Princess Leia. However, the CGI version (labeled “Original”) was not a perfect match, so a deepfake of Carrie Fisher’s face was overlaid to correct the image.

Zwentner

The entertainment industry is not the only sector reaping the benefits (and harms) of deepfake technology. Healthcare, too, is beginning to use this technology to serve patients, enhance research, protect privacy, and better analyze medical data.

In this two-part series, we will explore what deepfakes are, their applications in healthcare, and what we can do to (realistically) regulate deepfakes.

First, we are diving into the technical side of deepfakes to figure out what they are, how they are created, and why this technology matters.

What are Deepfakes?

According to Britannica, “Deepfakes [are] synthetic media, including images, videos, and audio, generated by artificial intelligence (AI) technology that portray something that does not exist in reality or events that have never occurred.” The term “deepfake” first appeared in 2017, when a Reddit user created the subreddit “r/deepfakes,” which hosted pornographic videos edited so that celebrities’ faces were superimposed on the individuals originally recorded.

In a face-swap deepfake, a desired face is overlaid onto existing video footage. Here, a man has Tom Cruise’s face superimposed over his own as he changes his facial expressions.

Verge

While the entertainment industry had been developing CGI face- and body-swapping technology for movies and TV, easy-to-use apps and platforms for creating deepfakes at home have gone viral since 2017. You may have already used deepfake technology yourself, by uploading pictures of yourself into apps that swap your face into scenes from your favorite movies or create a high school photo as if you had gone to school in the 1980s. But you are probably wondering, “How do they work?”

How do they work?

Deepfakes are created using a combination of machine learning programs that allow a computer to detect patterns in a dataset. The computer retains what it learned during this “training,” stored as the model’s adjusted internal settings (its parameters), and applies that pattern detection to new data. These programs include Convolutional Neural Networks (CNNs), which allow computers to map spatial dimensions and patterns in images. CNNs are commonly used in facial recognition.
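To make the idea of “training” concrete, here is a minimal Python sketch. It assumes the simplest trainable classifier, logistic regression, rather than a CNN, and it assumes each image has already been reduced to two hypothetical numeric measurements (`feature_1`, `feature_2`); the data are invented for illustration. The point is only the workflow described above: the program adjusts its weights on labeled examples, keeps those weights, and then applies them to inputs it has never seen.

```python
import math

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def score(w, b, x):
    # Weighted sum of the input features plus a bias term.
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def train(examples, labels, lr=0.5, epochs=1000):
    """Learn weights by stochastic gradient descent on the logistic (cross-entropy) loss."""
    w = [0.0] * len(examples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(examples, labels):
            err = sigmoid(score(w, b, x)) - y  # gradient of the loss w.r.t. the score
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

def predict(w, b, x):
    return sigmoid(score(w, b, x)) > 0.5

# Hypothetical training data: [feature_1, feature_2] per image, scaled to 0..1.
# Label 1 = horse, 0 = donkey (chosen only to echo the example in the text).
examples = [[0.2, 0.9], [0.3, 0.8], [0.25, 0.95], [0.35, 0.85],
            [0.8, 0.2], [0.9, 0.3], [0.7, 0.25], [0.85, 0.1]]
labels = [1, 1, 1, 1, 0, 0, 0, 0]

w, b = train(examples, labels)      # "training": the weights are learned, then kept
print(predict(w, b, [0.3, 0.9]))    # new, unseen input -> True (horse)
print(predict(w, b, [0.8, 0.15]))   # new, unseen input -> False (donkey)
```

A real deepfake model works on raw pixels and has far more weights, but the train-then-apply loop is the same in spirit.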

Donkey with horse

AnimalHow.com

Let’s say you train a computer on images of horses and donkeys, then ask it to determine the differences between them and to produce 50 new images of only horses. You, as an observer, may know very well how to tell the two apart, but giving the computer descriptors like “long snout,” “long-haired tail,” and “pointed ears” may not be very helpful. A CNN, however, can connect the spatial dimensions, configuration, and relationships of these features to determine, as a whole, whether an image shows a donkey or a horse, taking into account fine details of their features, color, proportions, and more.
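The core operation of a CNN layer, convolution, can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions: the 3×3 edge filter `EDGE` is hand-written (a trained CNN learns its filter weights from data), and the “images” are tiny hypothetical grids of 0s (dark) and 1s (bright). Sliding the same filter across an image produces a feature map showing *where* a pattern occurs; max pooling then reports *whether* it occurs anywhere, which is one way CNNs recognize a feature regardless of its position.

```python
def convolve2d(image, kernel):
    """'Valid' 2D convolution (strictly, cross-correlation, which is what CNN layers compute)."""
    kh, kw = len(kernel), len(kernel[0])
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(len(image[0]) - kw + 1)
        ]
        for i in range(len(image) - kh + 1)
    ]

def relu(feature_map):
    # Keep positive responses, zero out negative ones.
    return [[max(0, v) for v in row] for row in feature_map]

def max_pool(feature_map):
    """Global max pooling: the strongest response anywhere in the map."""
    return max(max(row) for row in feature_map)

# Hypothetical filter that responds to a dark-to-bright vertical edge.
EDGE = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

def edge_image(col, width=6, height=4):
    """Tiny grayscale image: 0 (dark) left of column `col`, 1 (bright) from `col` onward."""
    return [[1 if j >= col else 0 for j in range(width)] for _ in range(height)]

left, right = edge_image(2), edge_image(4)
print(convolve2d(left, EDGE))   # [[3, 3, 0, 0], [3, 3, 0, 0]]  edge found on the left
print(convolve2d(right, EDGE))  # [[0, 0, 3, 3], [0, 0, 3, 3]]  same edge, further right
print(max_pool(relu(convolve2d(left, EDGE))), max_pool(relu(convolve2d(right, EDGE))))  # 3 3
```

Real CNNs stack many such layers, so that, roughly speaking, later filters respond to combinations of earlier ones, building from edges toward larger shapes such as ears or a snout.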

Deepfakes can then be created by building on this kind of CNN training with a framework called Generative Adversarial Networks (GANs). A GAN pits two neural networks against each other: the generator, which attempts to create, say, an image of a horse, and the discriminator, which tries to determine whether a given image is a real one from the training dataset or one synthesized by the generator. When the discriminator correctly flags an image as fake, that feedback is used to adjust the generator, which then tries again. This cycle of refinement continues until the discriminator can no longer reliably distinguish generated images from those in the original training dataset.
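The adversarial loop can be illustrated with a deliberately tiny, one-dimensional GAN. This is a sketch under strong simplifying assumptions, not how production deepfake models are built: the “real data” are numbers drawn from a normal distribution centered at 0 rather than images, the generator learns only a single offset `b` (it outputs random noise shifted by `b`), and the discriminator is a one-feature logistic model. On each step, the discriminator is nudged to score real samples higher and generated ones lower; the generator is then nudged, using the discriminator’s feedback, to make its samples score higher.

```python
import math
import random

def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def train_toy_gan(steps=2000, lr=0.05, batch=64, seed=0):
    rng = random.Random(seed)
    real_mean = 0.0   # hypothetical "real" data: samples from N(0, 1)
    b = -3.0          # generator G(z) = z + b starts far from the real data
    w, c = 0.0, 0.0   # discriminator D(x) = sigmoid(w * x + c), read as P(x is real)
    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # Discriminator step: gradient ascent on mean[log D(real)] + mean[log(1 - D(fake))],
        # i.e. get better at telling real samples from generated ones.
        gw = gc = 0.0
        for x in real:
            p = sigmoid(w * x + c)
            gw += (1.0 - p) * x
            gc += 1.0 - p
        for x in fake:
            p = sigmoid(w * x + c)
            gw -= p * x
            gc -= p
        w += lr * gw / batch
        c += lr * gc / batch

        # Generator step: gradient ascent on mean[log D(G(z))], i.e. use the
        # discriminator's feedback to make generated samples look more "real".
        gb = sum((1.0 - sigmoid(w * x + c)) * w for x in fake)
        b += lr * gb / batch
    return b, w, c

b, w, c = train_toy_gan()
print(f"generator offset b = {b:.2f} (real mean is 0.0)")
print(f"discriminator on a typical sample: D(0) = {sigmoid(c):.2f}")
```

If training works as intended, `b` drifts from -3 toward 0 and the discriminator’s output settles near 0.5, meaning it can no longer tell real samples from generated ones, which is the stopping point the paragraph above describes.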

What’s the big deal?

What’s important to remember is that computers do not “think for themselves.” They are predictive models that can do their job very well or very poorly, sometimes creating images that seem fanciful or ridiculous, like monkeys playing chess on a board with too many squares. One known issue with generative artificial intelligence is its tendency to “hallucinate,” or create content that does not reflect physical reality, the historical record, or verifiable information.

A generative AI image of “Monkeys Playing Chess” with “hallucinated” portions that do not reflect the structure of a real chess game

www.freepik.com

What makes deepfakes so consequential is not only how convincing they can be, but whether we can still discern what is real from what is false. Courts, in particular, are deliberating whether photo and video evidence should be admissible in the era of deepfakes, because deepfake detection is still inconsistent, as demonstrated in IBM’s most recent tests of its detection systems on real versus generated political videos.

Because of this, we need to take a step back and decide what regulations are needed to protect individuals whose data is used to generate deepfakes, particularly depending on whether that data is used with or without their explicit consent. We also need to consider whether regulatory standards should govern how deepfake-generating models are trained, to ensure that training datasets are representative of all people, especially when these models are applied in healthcare settings.

Do Deepfakes always have negative outcomes?

Not necessarily. In the next blog post of this series, we will dig deeper into the many ways deepfake technology is helping physicians, dentists, surgeons, and researchers better care for their patients.

Author

— by Alyssa Montalbine, MS, Graduate Student, Emory and Georgia Tech, 7/2025

_______________________

Continue the conversation! Please email us your comments to post on this blog. Enter the blog post # in your email Subject.