#6B Regulating Deepfakes - Balancing Perspectives in Healthcare: Part II

You may not realize it yet, but deepfakes are revolutionizing healthcare! Artificial intelligence and telehealth are transforming patient-physician communication, tailoring treatments to individual patients, and catalyzing research into new medical treatments, and deepfake technology is no exception. However, as with any technology, not every use of the tool results in a positive outcome. In Part II of this series, we balance the views on deepfakes in healthcare by examining some of the ways they are elevating the practice of medicine, along with the potential consequences of some up-and-coming commercial applications that could prove extremely helpful (or harmful) in the coming years.

Deepfakes in Data Privacy Protection

The age of “big data” has given medical professionals and researchers access to extensive patient data, which could be used to develop life-saving treatments, as AI can detect patterns that humans alone may be unable to see. Yet much patient data outside of the classic research setting, especially imaging, is unavailable for secondary analysis under the protections of HIPAA, and those protections become increasingly important as hospitals and other healthcare databases are attacked and held for ransom by hackers. Secondary analysis occurs when research is conducted on previously collected data to uncover additional underlying patterns and phenomena. While many medical breakthroughs have come through secondary analysis, one concern is ensuring oversight of who has access to the data and whether it can be properly protected, anonymized, and encrypted.

Deepfakes have the potential to help here by generating synthetic, anonymized images that are based on real patients’ data and can be used for research and education. These images could improve our understanding of disease mechanisms and treatment feasibility across varied populations, while keeping the underlying training data secure and protected. However, if deepfake “data representative images” are created, safeguards would be needed, including (i) a subject pool large enough to support statistically meaningful conclusions, (ii) transparent reporting for researchers on important qualitative and quantitative factors related to the training set, and (iii) restrictions that keep the deepfakes from “hallucinating,” i.e., generating images that do not conform to the reality of the data set.
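As a rough illustration of safeguards (i) and (ii), the sketch below shows what a minimal transparency report for a training set might look like. Everything in it is an assumption made for illustration: the `SourceImage` fields, the `training_set_report` function, and the default threshold of 100 subjects are hypothetical, and a simple head count is only a crude stand-in for a real statistical power analysis.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class SourceImage:
    """One training image, described only by non-identifying metadata (hypothetical schema)."""
    subject_id: str  # pseudonymous ID, never a real patient identifier
    age_group: str
    modality: str


def training_set_report(images, min_subjects=100):
    """Summarize a list of training images for researchers.

    min_subjects is an illustrative threshold, not a recommended value.
    """
    subjects = {img.subject_id for img in images}
    return {
        "n_images": len(images),
        "n_subjects": len(subjects),
        # Safeguard (i): is the subject pool at least the agreed minimum size?
        "meets_min_subjects": len(subjects) >= min_subjects,
        # Safeguard (ii): report how the images are distributed.
        "images_by_age_group": dict(Counter(img.age_group for img in images)),
        "images_by_modality": dict(Counter(img.modality for img in images)),
    }
```

A report like this would travel with the synthetic images, so researchers can judge whether the population behind them matches the population they want to study. It does not address safeguard (iii), which would require comparing generated images against the source data.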

Deepfakes in Physician-Patient Communications

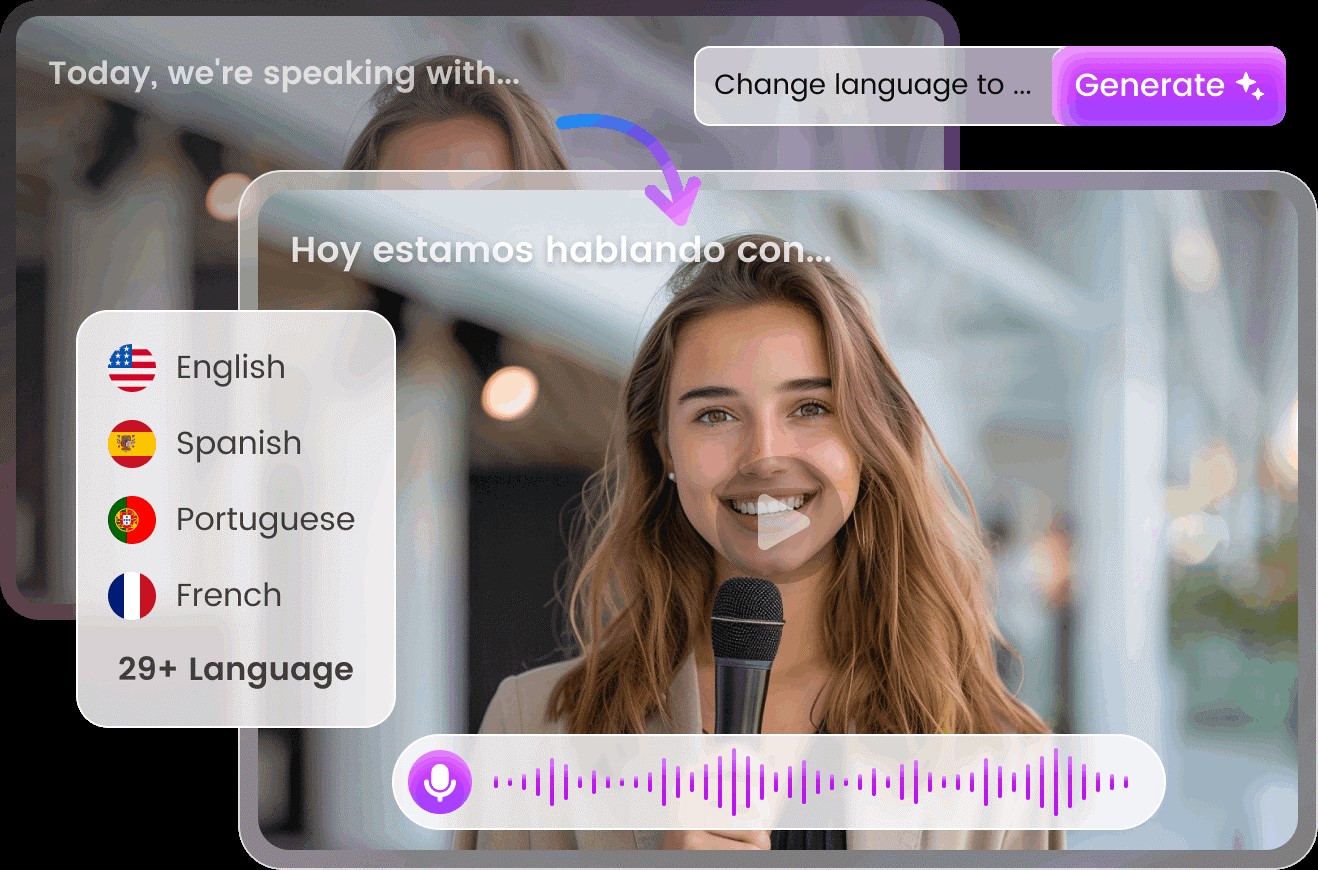

The entertainment industry’s use of AI and deepfakes has made it possible to quickly dub upcoming films and cinema classics into other languages by generating new voices and lip movements at the push of a button. You can now hear and see Marlon Brando giving his classic speech in The Godfather as deepfake technology recreates Brando’s voice and lips to match the movements and cadence of French, Japanese, Swahili, or whatever language suits your fancy. This is known as “AI-dubbing,” and it is currently being used by streaming platforms like Netflix and in apps available to the general public.

AI-dubbing of a personal video into multiple languages

In the medical setting, this can be especially helpful for doctors offering telehealth appointments to communities across the world that lack sufficient access to quality medical care and translators. A doctor speaking in English could have the diagnosis translated into a different language in real time, with lip movements included, so that the patient understands the diagnosis and the two can hold an ongoing conversation to ensure medical clarity. The key safeguard here is the “human in the loop”: both the doctor and the patient must be able to verify that the deepfake technology is accurately translating what they have said.

Additionally, significant development time would be needed to ensure that the AI can detect and understand different accents and dialects, and that it helps doctors explain medical terminology in a way that is accessible and understandable to a patient in a different language. Overall, deepfakes can transform the way we approach health literacy and healthcare access, but human oversight is still needed in the development of these early-stage platforms.

Deepfake Doctors: Dependable or Deceptive?

An emerging issue is the concept of a “deepfake doctor,” a “medical professional” generated by AI to conduct your next appointment. Your “doctor” could have the face of your favorite celebrity, your childhood physician, or an AI-generated face of someone who does not actually exist. Whether this seems cool or unsettling, many companies, such as NVIDIA through its partnership with Hippocratic AI, are investing to make it happen. So, let’s take a closer look at some areas of concern.

Let’s imagine that deepfake doctors could be reliable, e.g., that the training data sets could be reviewed and that companies could verify that the “AI doctor” has the same working knowledge and accreditation as if it had attended one of the best medical schools in the world. In theory, deepfake doctors could perform at a level similar to, or even better than, human doctors in diagnosing disorders.

But then there is the question of deception: Is the use of the face and voice of someone you trust or admire, like Morgan Freeman, manipulative? Psychology research suggests that we often judge whether someone is trustworthy based on their facial cues and voice intonation. Morgan Freeman has a twinkle in his eyes and a soothing voice that would be a perfect model for a “deepfake doctor” to replicate. But Morgan Freeman, the real Morgan Freeman, would not be your actual doctor. And bombarding Morgan Freeman’s personal email with your medical questions might make him file a restraining order against you.

Is this a distortion of reality that makes it difficult to verify who gave you this information? Will the “deepfake doctor” retain a memory of what it said to you, the patient? Will it forget? Can the deepfake doctor accept liability for a bad diagnosis or medical misinformation?

TikTok accounts posting videos of “doctors” who claim medical expertise while posing under fraudulent identities and spreading medical misinformation, as reported by the New York Post.

This is already a problem running rampant on TikTok and other social media platforms, where “doctors” are suggesting treatments and explaining diseases in ways that are not in line with reality. Many viewers are raising red flags, pointing to lagging body movements, mismatched lip movements, and other visual cues that indicate these are deepfakes created to spread medical misinformation and sell questionable health products, as reported by the New York Post. What’s more, the images and voices of prominent and respected doctors, like endocrinologist Dr. Jonathan Shaw, have already been used to push incomplete, misleading, and false medical information to the public.

Will the normalization of deepfake doctors – in our era of short attention spans – increase the likelihood that these malicious fraudsters are believed? Will “deepfake doctors” only cause further detriment to patient trust in the healthcare system? Is this risk even worth the commercial investment? You, the future patient of deepfake doctors, will need to decide.

Author

— by Alyssa Montalbine, MS, Graduate Student, Emory and Georgia Tech, 7/2025

_______________________

Continue the conversation! Please email us your comments to post on this blog. Enter the blog post # in your email Subject.